Jeremy Kahn is a journalist at Fortune magazine, where he leads a team of reporters that cover artificial intelligence. He is the lead author of Fortune’s Eye on AI newsletter.

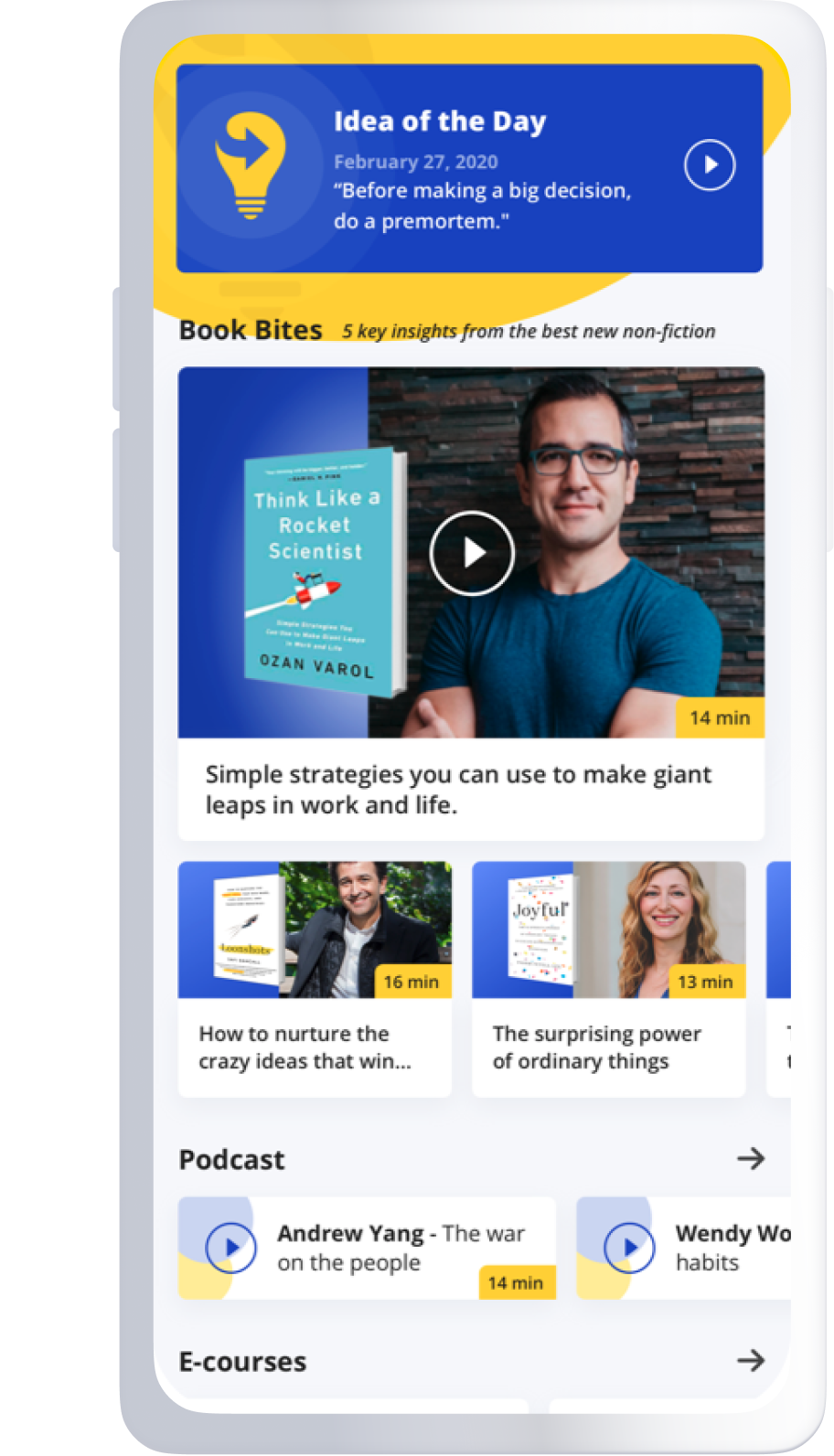

Below, Jeremy shares five key insights from his new book, Mastering AI: A Survival Guide to Our Superpowered Future. Listen to the audio version—read by Jeremy himself—in the Next Big Idea App.

1. AI will not lead to mass unemployment.

One of the most common misconceptions about AI is that it will lead to mass unemployment. This is not true—at least not anytime soon. While AI has become more capable, it cannot do all the tasks that constitute most people’s work. AI can help us do a lot of things, but it needs human supervision. We will mostly use these tools as professional assistants—copilots rather than autopilots.

For most knowledge workers—accountants, lawyers, doctors, marketing executives, software developers, consultants, architects, and analysts—demand for their collective services outstrips supply. This supply constraint is one reason people in these professions can charge so much for their labor. But as a result, many individuals and companies (small businesses in particular) cannot access these services because of their high cost.

AI changes this equation by making existing knowledge workers more productive and by allowing workers who lack the formal qualifications or experience to work as software coders, lawyers, or accountants to take on at least some of the work these professionals perform. Increasing the supply of people who can perform these tasks should lower the cost of these services. That’s good for the economy. Letting less formally educated workers take on professional tasks also gives a group that has been increasingly squeezed out of the middle class (forced into lower-paid jobs in retail or hospitality) the chance to lift themselves back into it. That, too, is good for the economy.

Some highly paid professionals might see their earnings shrink, which could provoke a backlash, but overall, AI should be good for the economy and reduce income inequality.

Now, there are ways to get this wrong. If we look at AI only as a direct substitute for human labor, we will still get economic growth, but the wealth that growth creates will be distributed extremely unevenly, meaning income inequality will get worse. One way to guard against this is a robot tax on businesses that deploy automation and grow their sales while laying off workers. Such a tax would give companies an incentive not to use AI as a means of eliminating jobs.

2. The biggest risk AI poses is to our own intelligence—both IQ and EQ.

As we come to rely on AI, there’s a danger that human cognitive and social skills will atrophy. Sociologist Daniel Bell and anthropologist Jack Goody called the tools we use to produce and disseminate knowledge “intellectual technologies.” When we use an intellectual technology, it doesn’t just change what we do or how we do it; it changes how we think. AI is the ultimate intellectual technology.

Modern neuroscience has demonstrated that our brains are very adaptable: neural pathways we use often are strengthened, and those we don’t use wither. Studies have consistently shown that relying on software can rewire our brains. When we use GPS to navigate, we pay far less attention to our surroundings. Younger people who have grown up without needing to use maps or remember a route have far worse navigational skills than older adults. Similarly, we remember less when we use Google to look up information. Dutch cognitive psychologist Christof van Nimwegen conducted research showing that when we use software to help solve problems, it becomes a crutch, and we fail to develop effective problem-solving strategies of our own. AI threatens to diminish our cognitive skills in the same way. If we rely on AI to do our analysis, our critical thinking will dull, and we will tend to accept AI’s capsule answers without question.

AI encourages the dangerous proposition that writing is severable from thinking. With AI chatbots, it is easy to provide a short prompt or a few bullet points and have the chatbot write an essay, speech, or business strategy document for us. But the act of writing sharpens our thinking. Our ideas improve when we are forced to explain them in sentences, not bullet points. Arguments come into sharper relief. We construct narratives that make our points more salient and memorable. Amazon founder Jeff Bezos banned PowerPoint presentations because he believed they encouraged lazy thinking and let critical information get lost in the slide decks. AI could make this tendency to reduce thought and expression to bullet points far worse.

Our social skills could be damaged by AI use, too. Some people propose that AI chatbots could cure loneliness or support mental health by delivering therapy. Already, some people use chatbots for companionship and even romantic and erotic fulfillment. Dialogue with a chatbot may sound convincingly human, but our relationship with this software is decidedly one-sided. The chatbot has no needs, makes no real demands, rarely challenges us, and tolerates abuse. Interacting with a chatbot does not teach someone to build meaningful and lasting human relationships. Because chatbot conversation is far easier and less messy than real human relationships, it would not be surprising if some people came to prefer chatbot companions to real human friends or lovers. That preference will increase social isolation.

AI might degrade not only our intellectual prowess (IQ) but also our emotional intelligence (EQ). Intellect and emotional skills sit at the heart of what makes us human. We should not let them atrophy.

3. Training people will be more important than training AI.

If you’ve been following news about AI, you will have heard a lot of discussion about what data was used to train different AI software. Training data for AI is important for a number of reasons, including concerns about data privacy, copyright, and the biases that AI models may learn from the data. But how we train people to use AI is more important.

Soon, AI will assist with many tasks in work and daily life. Learning how to work alongside AI effectively will require some new skills. We will need to learn which tasks are best handled by the model and which we ought to reserve for ourselves. We will need to learn how best to give the AI software a set of instructions (what people in the AI field call a prompt) so that it does what we want. For both, we will need a good understanding of how the AI system works, including its strengths and weaknesses.

NASA has given considerable thought to how people can best work alongside automated systems. The space agency knows that on future missions to Mars (a journey of at least six months), astronauts will need to rely on many automated systems, including AI assistants. NASA has been researching how these assistants can best communicate information to astronauts.

NASA is particularly concerned about two cognitive biases that tend to affect people interacting with technology. One is called automation bias: the tendency to think that the computer always knows best and to rely on its suggestions even when other data, including the evidence of our own senses, suggest the computer might be wrong. The other is called automation surprise, which occurs when an automated system goes wrong. We often take much longer to realize that software automation has failed than to notice that a mechanical system has broken. Our confusion in these circumstances can give way to panic, leading people to take actions that make the situation worse.

NASA has discovered that the best way to overcome both cognitive biases is by giving astronauts a “mental model” of how an AI system works and its limits. NASA uses frequent simulations to train its astronauts and build that mental model before it ever pairs AI with astronauts in a real spacecraft. This is something many companies should consider: using training courses and hands-on simulations to make sure people grasp what AI can do, what it can’t do, how best to use it, and what to do if it fails.

4. AI’s biggest positive impacts will be in education and medicine.

When ChatGPT came out, teachers panicked. Students were using the chatbot to cheat, and educators were full of dire predictions. One commentator declared AI had “killed the college essay.” Several major school districts in the U.S. banned access to ChatGPT. Universities in Australia said that to prevent AI-assisted cheating, they would revert to in-person, handwritten examinations. It is certainly true that some students have used AI to cheat. But the technology has the potential to transform education for the better.

AI can put a personal tutor in every student’s pocket, providing the kind of one-on-one support that only a few students have ever had access to. This, in fact, was a vision Apple co-founder Steve Jobs laid out in a now-famous speech he gave in Sweden in 1985. Jobs talked about the personal computer’s potential to one day let students learn from Aristotle, who had been Alexander the Great’s personal tutor. Today, students can ask an AI chatbot to role-play as Aristotle, Leonardo da Vinci, Frederick Douglass, or any other historical figure and converse with them.

To get value from AI in education, educators will need to change how they teach. They will need to institute flipped classrooms, where the part of education that involves simply receiving information happens at home (through video-recorded lectures or assigned reading), while class time is reserved for problem sets, written assignments, lab work, and, crucially, small-group discussion. AI can help here, too. While the teacher makes their way around the classroom, providing individual feedback and support, AI can act as the ultimate teaching assistant, giving personalized support to the other students. Insights from AI tutors can help human teachers better target individual interventions. AI can also help teachers plan lessons and create supporting material, such as worksheets or quizzes.

The other area where AI can have a major positive impact is medicine. AI is already helping scientists discover new drugs in record time, in part by helping them work out how a protein’s sequence, encoded in DNA, determines its three-dimensional shape. Some of these drugs are far more potent, with fewer harmful side effects, than those discovered using more traditional methods. The same kinds of large language models that power ChatGPT are being trained on vast libraries of biological and chemical data. One day soon, scientists may be able to specify the kind of drug they want in plain English and get a DNA or chemical recipe for creating it. AI is also poised to enable personalized treatments tailored to individual DNA profiles. Combined with the increasing use of wearable and implantable medical sensors, AI will let us and our doctors review far more data collected continuously about our health and intervene earlier and more effectively to head off disease or injury. While applying AI to the life sciences carries some risks, including a slightly elevated risk of bioterrorism, the positive impact AI will have on health far outweighs these concerns.

5. We still have the power to shape the development of AI. We need to use it.

Too often, people see technology as something that happens to them, about which they have no choice and no agency. That’s wrong in general, and it’s especially wrong when it comes to AI. This is still a nascent, developing technology. We have tremendous power to shape the course of its development—even if we aren’t working at a tech company.

Our power comes from being consumers, customers, employees, and citizens. No one will force us to use AI software as a substitute for our own creativity or emotional expression when it comes to tasks such as writing a parent’s obituary or designing a birthday card. No one is going to force us to interact with a chatbot daily instead of seeking out real human connection. It is up to us not to be lazy and fall into these traps.

Companies may increasingly require us to work with AI copilots. But as employees we have tremendous power, especially while this technology is still taking shape, to tell employers how we want it used and what guardrails we want put in place. We need to make clear that AI must augment human workers, not replace them.

Finally, we have tremendous power as citizens and voters to demand that government regulate AI. Nationally, we need a strong agency to oversee AI development and ensure it doesn’t pose undue risks. Policies like a robot tax could encourage businesses to complement human labor with AI. Internationally, we need standards and an agency to help all countries deploy AI safely and call out those that don’t. Achieving this governance is challenging but possible. We’ve successfully regulated nuclear, biological, and chemical technologies before. We must seize our opportunity to govern AI. The only way AI will wind up controlling us is if we fail to shape its development. By using our human agency and intelligence, we can master AI and ensure a superpowered future.

To listen to the audio version read by author Jeremy Kahn, download the Next Big Idea App today: